What is DALL-E Mini?

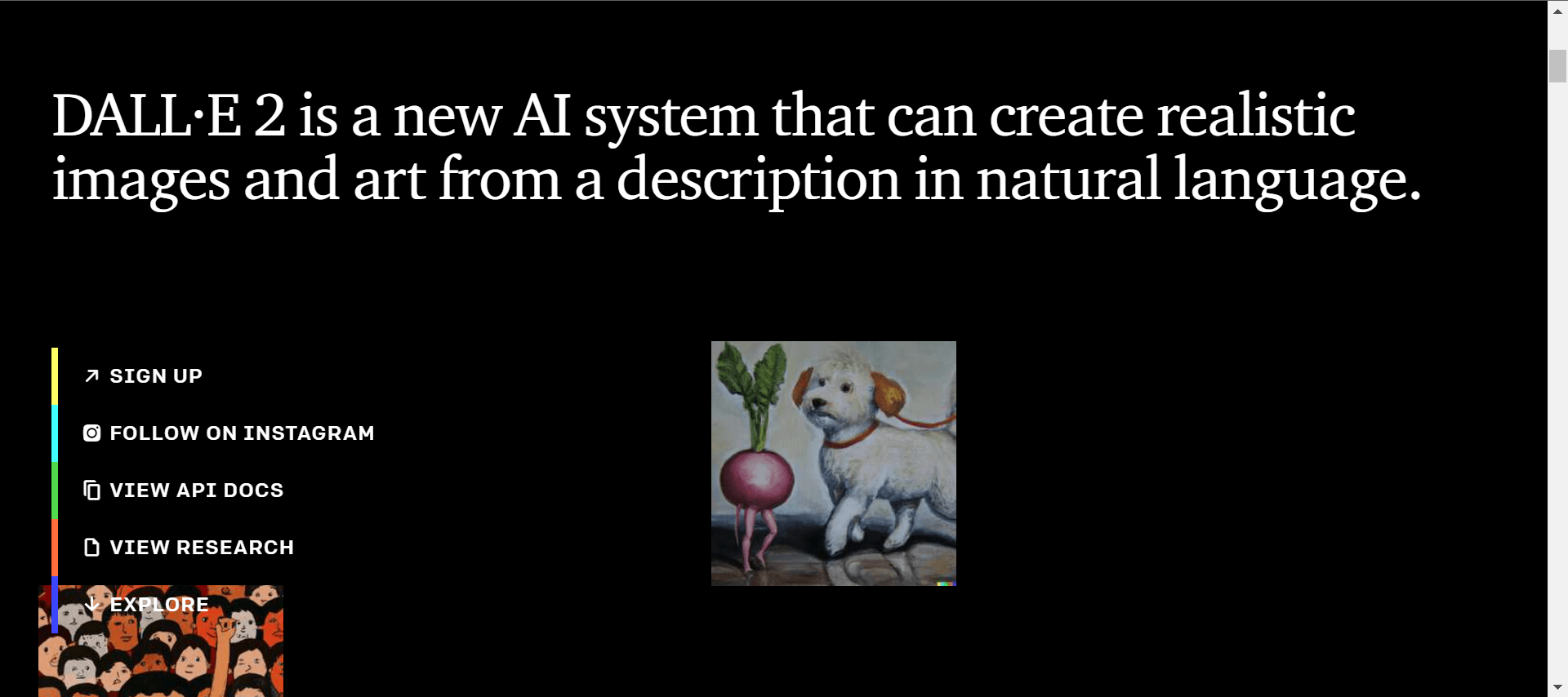

DALL-E Mini uses an open-source AI model inspired by OpenAI's DALL-E. It generates images from text prompts, although at a much lower resolution and fidelity than OpenAI's model. The model was trained on millions of image-caption pairs collected from the internet, and from these pairs it learns to draw images that match a text prompt. Its accuracy depends largely on how many images it is trained on. DALL-E Mini is not the only AI art service available. A similar service called Midjourney is in private beta, with a waitlist. The images it produces are stunning; however, access to the service is limited and its terms of service are unclear.

What features does DALL-E Mini have?

DALL-E Mini is a machine-learning project built on images collected from the web. The system can produce recognizable results, but its output is often distorted, with missing body parts or warped objects. Users have had fun comparing what they asked for with what the system actually produced. However, the system was not trained to pick up on sexual or explicit content, so it has taken some surprising turns. It is based on a sequence-to-sequence (seq2seq) model, an architecture commonly used in natural language processing (for example, in machine translation) and in computer vision. Instead of translating one language into another, DALL-E Mini's seq2seq model translates the tokens of a text prompt into a sequence of image tokens, which a separate decoder then turns into pixels. The same approach can be adapted to other computer vision tasks.
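The "translation" step described above can be pictured as an autoregressive loop: the decoder emits one image token at a time, each conditioned on the prompt and on the image tokens generated so far. The sketch below shows only that loop, under stated assumptions: `toy_model` is a hypothetical stand-in for the trained transformer, and the use of top-k sampling, along with the names `generate_image_tokens`, `k` and `num_image_tokens`, is illustrative rather than DALL-E Mini's actual code.

```python
import math
import random
from typing import Callable, List, Sequence

# Hypothetical model interface: given the prompt's text tokens and the image
# tokens generated so far, return one logit per entry in the image-token vocabulary.
NextTokenLogits = Callable[[Sequence[int], Sequence[int]], List[float]]


def top_k_sample(logits: List[float], k: int, rng: random.Random) -> int:
    """Sample an index from the k highest logits, weighted by a softmax over those k."""
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    m = max(logits[i] for i in top)  # subtract the max for numerical stability
    weights = [math.exp(logits[i] - m) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]


def generate_image_tokens(
    text_tokens: Sequence[int],
    next_token_logits: NextTokenLogits,
    num_image_tokens: int,
    k: int = 50,
    seed: int = 0,
) -> List[int]:
    """Autoregressively 'translate' text tokens into a sequence of image tokens."""
    rng = random.Random(seed)
    image_tokens: List[int] = []
    for _ in range(num_image_tokens):
        logits = next_token_logits(text_tokens, image_tokens)
        image_tokens.append(top_k_sample(logits, k, rng))
    return image_tokens


def toy_model(text_tokens: Sequence[int], image_tokens: Sequence[int]) -> List[float]:
    """Hypothetical 8-token 'model' that strongly favors one token per step."""
    favored = (sum(text_tokens) + len(image_tokens)) % 8
    return [5.0 if i == favored else 0.0 for i in range(8)]


# With k=1 the loop is greedy: it always picks the favored token.
tokens = generate_image_tokens([3, 1, 4], toy_model, num_image_tokens=16, k=1)
print(tokens)
```

Setting `k=1` makes decoding greedy and deterministic, which is convenient for testing; larger `k` values trade consistency for variety, which is plausibly why one prompt can yield several quite different candidate images.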

DALL-E Mini uses algorithms and math to build an image generation program. It learns from images and their captions, picking up patterns such as which colors and shapes go with which words, and then uses this knowledge to create new images that match a prompt. While this program has many uses, some users have found its output disturbing. The model was trained on 15 million image-text pairs, and its output may improve with a larger dataset and more training time. Until then, the system is still in the developmental stages, but the potential for further improvement is huge.

DALL-E Mini is an open-source project that uses AI to create images from text. It is modeled on OpenAI's original DALL-E. Users have shared their reactions to the project on social media. While its output can be unsettling, it has also brought some fun into people's lives.

DALL-E Mini is a web application created by Boris Dayma, a Texas-based computer engineer. It was originally called DALL-E Mini but has since been renamed Craiyon at OpenAI's request. It was developed in collaboration with various AI research communities.

Competitors

After the success of DALL-E 2, a lightweight, open-source competitor rose to viral fame: Craiyon, formerly DALL-E Mini. Its images are far less accurate than those of OpenAI's model, but anyone can use it for free, and it is promising technology.

Another rival to DALL-E is Imagen, an AI model created by Google. Imagen combines a deep level of language understanding with an unprecedented degree of photorealism, but Google acknowledges that it can reproduce cultural and social biases from its training data. Imagen is far more realistic than DALL-E Mini, yet it carries the same risk of producing biased images.

As UC Berkeley graduate student Charlie Snell has described it, DALL-E Mini consists of three pieces: an autoencoder (VQGAN) that converts images to and from compact image tokens, a transformer that turns the text prompt into image tokens, and a model (CLIP) that understands how an image correlates with a textual description. CLIP also ranks the generated images and prioritizes those that best match the prompt. In short, the system takes a text prompt and turns it into an image. It was trained on millions of images from the internet, learning from their captions. It can produce images that are both beautiful and frightening. However, users have complained that the software is far from perfect; in particular, it struggles to draw realistic faces.
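The ranking step can be sketched as a similarity search. Assuming the ranking model maps both the prompt and each candidate image to vectors in a shared embedding space (as CLIP does), candidates can be ordered by cosine similarity to the prompt. The function names and the three-dimensional vectors below are hypothetical and made up for illustration; real embeddings have hundreds of dimensions and come from trained text and image encoders.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_candidates(
    text_embedding: Sequence[float],
    image_embeddings: Sequence[Sequence[float]],
) -> List[Tuple[int, float]]:
    """Return (candidate index, score) pairs, best match to the prompt first."""
    scored = [
        (i, cosine_similarity(text_embedding, emb))
        for i, emb in enumerate(image_embeddings)
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical embeddings standing in for encoder outputs.
prompt_vec = [1.0, 0.0, 1.0]
candidate_vecs = [
    [0.0, 1.0, 0.0],  # unrelated image
    [1.0, 0.1, 0.9],  # close match
    [0.5, 0.5, 0.5],  # partial match
]
ranking = rank_candidates(prompt_vec, candidate_vecs)
best_index = ranking[0][0]
print(ranking)
```

Because cosine similarity ignores vector length, the score reflects how well an image's content lines up with the prompt rather than how "strong" its embedding is, which suits the job of picking the best of several candidates.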

Why does it raise ethical questions?

As more artists turn to DALL-E for artwork, several ethical issues need to be addressed. First, artists using the tool should be properly trained in how it works and where its limits lie. Second, because the tool can generate so many images, it is important to make sure that no race, gender, or ethnicity is harmed by them. Finally, artists should be aware of the need to represent people from diverse backgrounds.

DALL-E Mini is a text-to-image model that turns text phrases into images. The system was trained by analyzing millions of images on the internet, which also lets it produce unusual images. It is still far from perfect, but it is a step in the right direction. While the full DALL-E model is capable of producing clean images, the Mini version tends to mimic the style of the internet and produce surreal images. Some of the best examples of these images come from the r/weirddalle subreddit.

DALL-E Mini's content policy sets out guidelines for its users. While users are largely free to create what they want, they should avoid generating content that violates the policy. This means there are a few things users should watch out for, and DALL-E Mini needs to keep developing its safety measures.

Conclusion

While DALL-E Mini is delightful, it is also risky. Its use of artificial intelligence raises many ethical issues. For example, people could use the technology to create pornography or political deepfakes, and it could put human illustrators out of work. It could also reinforce harmful stereotypes and biases. These are just a few of the ethical questions that DALL-E Mini raises.

DALL-E Mini's creator has said that he has addressed the issue of bias in the program. However, he has not said whether AI systems should be able to block harmful prompts or report certain results to prevent people from exploiting them. He has said that he is working on these issues at Hugging Face.